You’ve probably heard of ChatGPT, the latest advance in natural language processing, which is taking the internet by storm after passing one million users in just five days!

Released only a few weeks ago, the free AI chatbot owes its overnight popularity to its ability to answer questions and explain concepts plainly, and to perform complex tasks such as writing an article or telling a joke. For web and mobile app developers, this has broad implications for automated testing, since ChatGPT can write test cases in a wide range of frameworks and languages. Let’s explore the possibilities.

What is ChatGPT?

Created by OpenAI, ChatGPT is a large language model that was fine-tuned on large datasets using supervised learning and reinforcement learning. During training, the model learns patterns in this data that help it understand natural language and how words are used in context. It is a dialogue-based model, which means it is designed for back-and-forth, chat-like interaction. You can ask ChatGPT about almost anything, as long as the system doesn’t consider the request offensive.

ChatGPT can generate creative, on-topic responses, often explaining why it produced a particular answer. It also remembers earlier turns in the conversation, which lets it hold a (mostly) coherent dialogue. People have asked ChatGPT for everything from answers to questions on soil physics to folk songs about beer!

ChatGPT and Automated Testing

One of the most interesting features of ChatGPT for those of us in the software space is that it can generate properly formatted, relevant code from a simple natural language request. It can generate code in many languages and use many of the common packages and libraries available in each. So, the natural question is: can ChatGPT be used to generate code for automated testing?

The answer at this point is, “Yes, sort of.” ChatGPT can write Selenium code in multiple languages, as Sauce Labs’ own Nikolay Advolodkin demonstrates in his video “ChatGPT Can Code Better Automation Than Me.”

But being able to write code that looks accurate is just the beginning. In an ideal world, one would provide ChatGPT with a description of the test to generate, it would know all the details about the version of the website one is testing, and it would generate perfect, executable code that needs no modifications. ChatGPT currently can’t do that. What it can do is still quite impressive though.

Let’s start with a use case in which ChatGPT does not replace test engineers but instead serves as a new low-code method.

What is Low-Code Testing?

Low-code development lets people with little to no coding experience build software using drag-and-drop development platforms or plain English. Low-code testing solutions lower the barriers to writing test code, which makes it easier to scale development organizations. Because tests can be written without a technical skill set, low-code tools make it simpler for teams to write test automation code, reducing test debt.

ChatGPT can be viewed as a powerful low-code tool for writing test cases. Because it accepts natural language as input, users can write in their natural cadence and still be understood, unlike with template-based models, which often rely on particular language structures or key phrases. As we will show, ChatGPT does a remarkable job of generating test automation scripts, classes, and functions.

A Language for Low-Code: Cucumber

While ChatGPT can generate code in many languages and libraries, its sweet spot is Cucumber. Cucumber is a testing framework that supports behavior-driven development (BDD). Scenarios are written in plain English in a feature file, using keywords such as “Given,” “When,” and “Then.” Step definitions then associate each of these natural language phrases with code.
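To make the feature-file/step-definition split concrete, here is a deliberately tiny, hypothetical step runner in Python. It is not Cucumber’s or behave’s API; it only shows the core idea that each plain-English step is matched by a pattern and dispatched to a function. The shopping-cart scenario and the step names are invented for illustration.

```python
import re

# Hypothetical toy runner: registered (regex, function) pairs stand in for
# the step definitions that Cucumber-style frameworks maintain.
STEPS = []


def step(pattern):
    """Register a step definition whose regex must match the whole step text."""
    def decorator(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return decorator


def run_scenario(feature_text, context):
    """Run each Given/When/Then/And/But line against the registered steps."""
    for raw_line in feature_text.strip().splitlines():
        keyword, _, text = raw_line.strip().partition(" ")
        if keyword not in ("Given", "When", "Then", "And", "But"):
            continue  # skip "Feature:", "Scenario:", and blank lines
        for pattern, func in STEPS:
            match = pattern.fullmatch(text)
            if match:
                func(context, *match.groups())  # captured groups become arguments
                break
        else:
            raise LookupError(f"No step definition matches: {text!r}")


# Step definitions: each natural language phrase is bound to a function.
@step(r"the cart is empty")
def empty_cart(context):
    context["cart"] = []


@step(r'I add "(.+)" to the cart')
def add_item(context, item):
    context["cart"].append(item)


@step(r"the cart contains (\d+) items?")
def check_count(context, count):
    assert len(context["cart"]) == int(count)


FEATURE = """
Feature: Shopping cart
  Scenario: Add two items
    Given the cart is empty
    When I add "apple" to the cart
    And I add "pear" to the cart
    Then the cart contains 2 items
"""

ctx = {}
run_scenario(FEATURE, ctx)
print(ctx["cart"])  # ['apple', 'pear']
```

Real frameworks add much more (parameter types, hooks, reporting), but the division of labor is the same: the feature file states the intent, and the step definitions hold the code.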

Easier test maintenance is one of Cucumber’s benefits, since Cucumber scenarios combine the natural language intent of a test with the automation code that implements it. This lets testers who are less familiar with test code understand the link between test intent (in natural language) and test code. It also plays to ChatGPT’s strength: generating natural language together with the source code that goes with it.

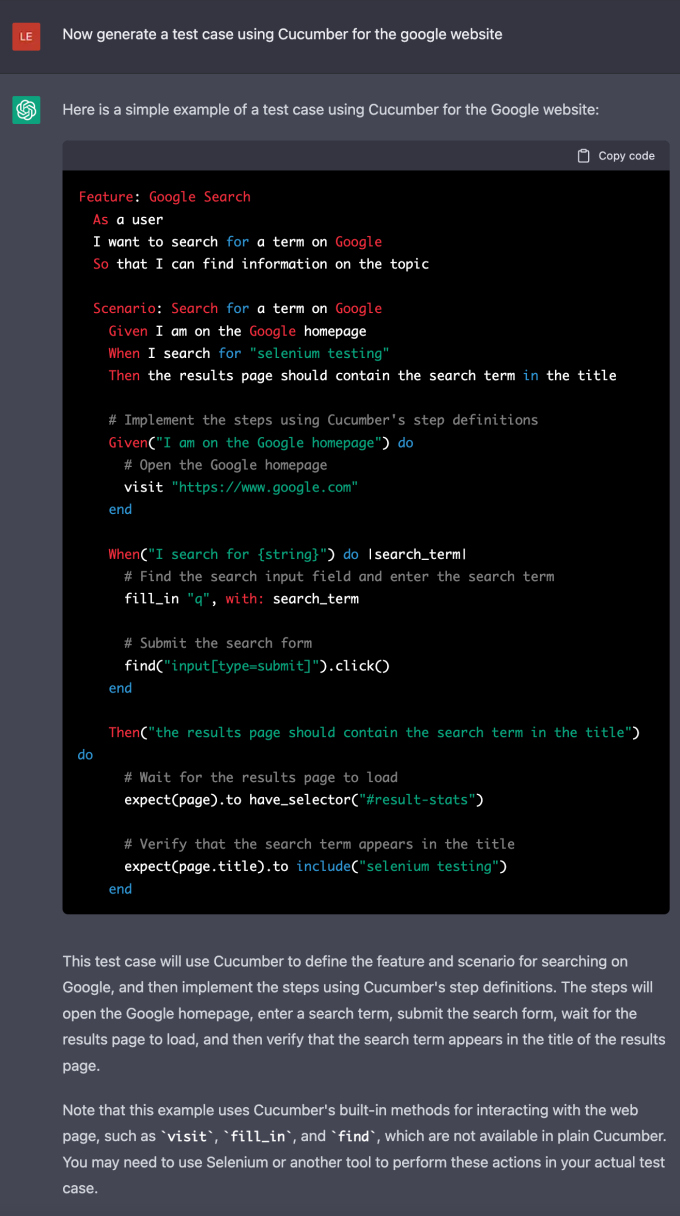

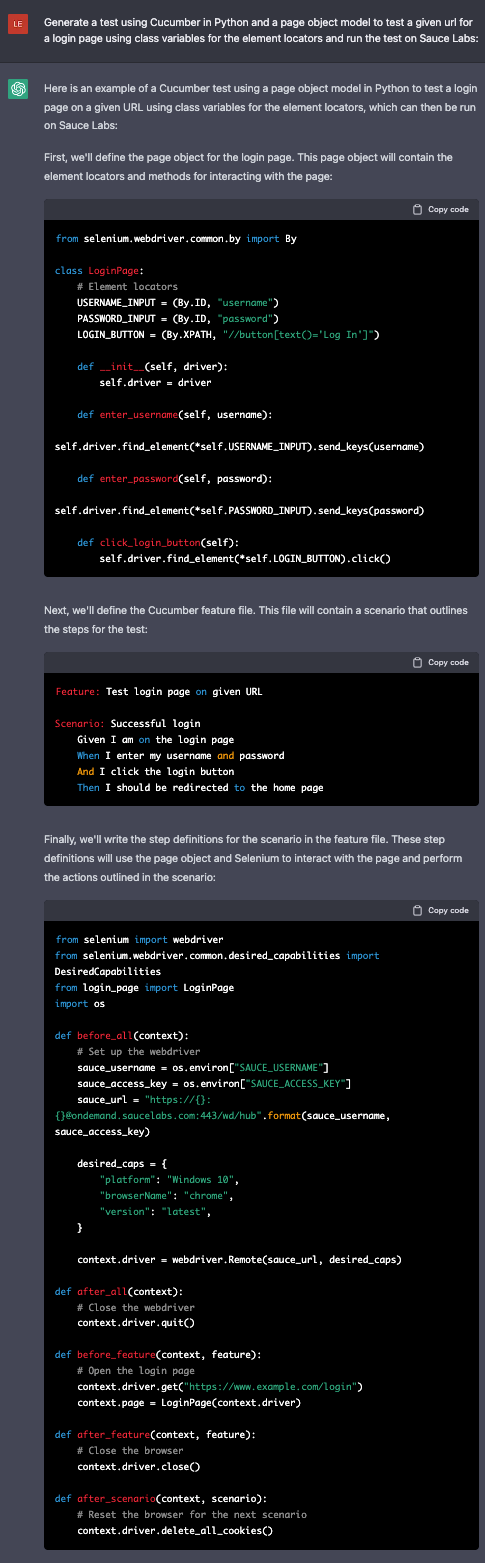

ChatGPT and Cucumber Test Case Example

Now let’s see what ChatGPT can do.

As you can see in the following example, ChatGPT’s ability to generate code is quite impressive. With a simple prompt, it can generate the scenario and the step definitions used by Cucumber all at once. It even knows the different components Cucumber needs to run without being explicitly told.

The prompt is vague about what to test on the website, but ChatGPT still generates a script that tests one of the Google website’s main features, its search functionality. It even correctly identifies the name attribute of Google’s search box, “q.”

A General and Manageable Script

While this is a good example of ChatGPT’s general ability to write code, not all websites are as ubiquitous as Google. Correctly predicting the identifier “q” here does not mean it will always be right. In fact, our testing showed that when ChatGPT doesn’t know an element’s actual locator, it makes one up.

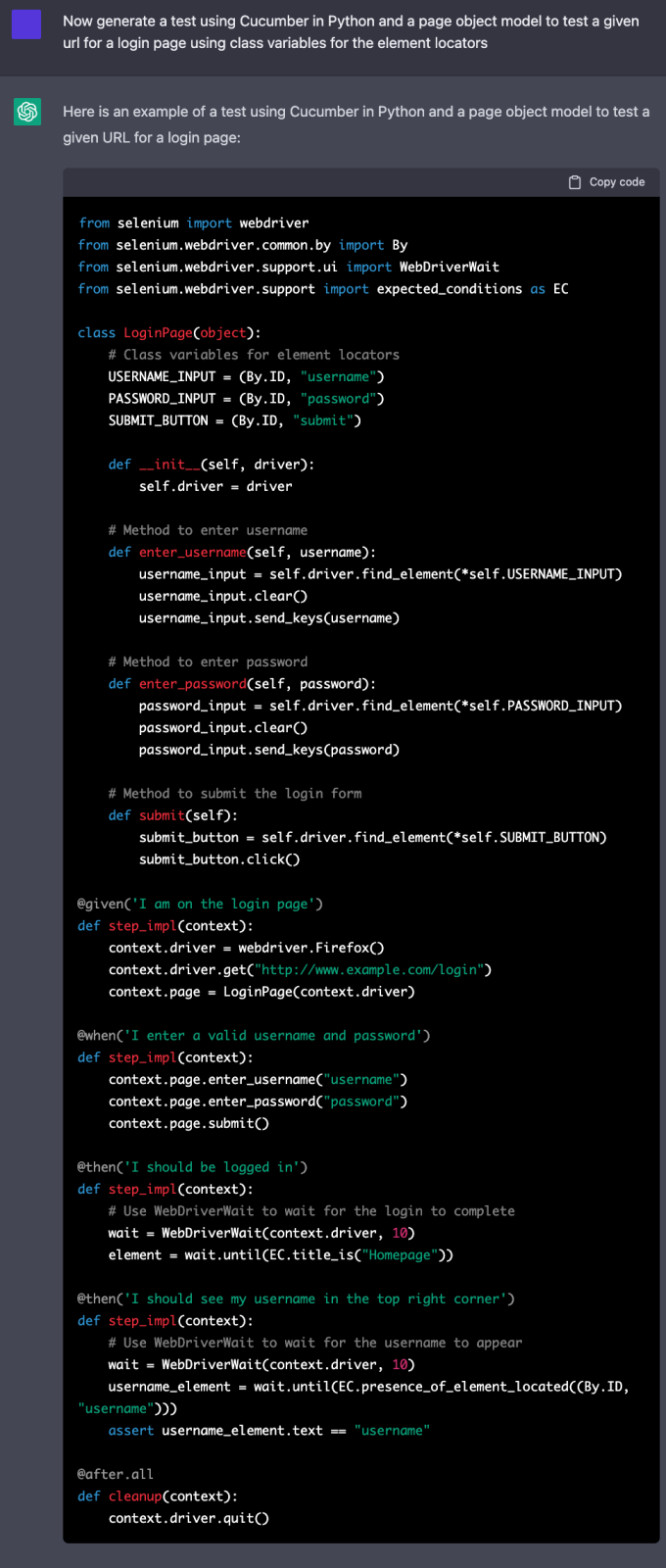

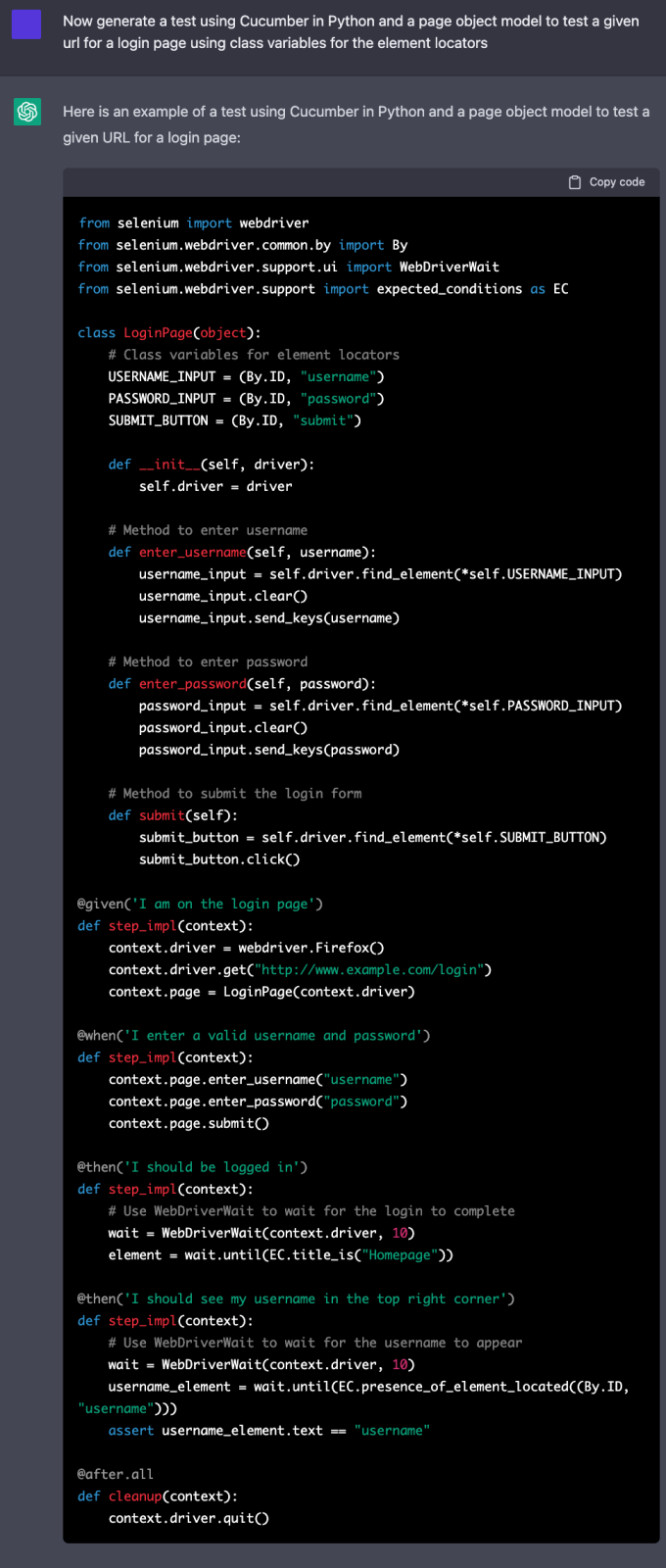

Going through the generated code to find and replace every element locator is tedious, but the job becomes much easier if the code that describes the page itself is kept separate from the test cases. A page object model does exactly this. It also means that if element locators or the structure of the app change between test runs, we only need to update the code in one place, which improves the maintainability of the test scripts.
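As a minimal sketch of the idea, using the Google search example: the page object below keeps every locator in one place as a class variable, so a made-up locator only has to be fixed once. It assumes a Selenium-style driver with `get(url)` and `find_element(by, value)`; the strings `"name"` and `"css selector"` are the values of Selenium’s `By.NAME` and `By.CSS_SELECTOR`, and the results locator is hypothetical.

```python
class SearchPage:
    """Page object for a search page; tests call its methods, never raw locators."""

    URL = "https://www.google.com"
    # All locators live here. If the page changes, only these lines change.
    SEARCH_BOX = ("name", "q")                  # By.NAME, "q"
    RESULTS = ("css selector", "div#search")    # hypothetical locator

    def __init__(self, driver):
        self.driver = driver  # any object with get() and find_element()

    def open(self):
        self.driver.get(self.URL)

    def search(self, term):
        box = self.driver.find_element(*self.SEARCH_BOX)
        box.send_keys(term)
        box.submit()  # submits the form the search box belongs to

    def results_shown(self):
        return self.driver.find_element(*self.RESULTS).is_displayed()
```

With Selenium installed, `SearchPage(webdriver.Chrome())` would drive a real browser; in a unit test, any object with the same methods can stand in for the driver.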

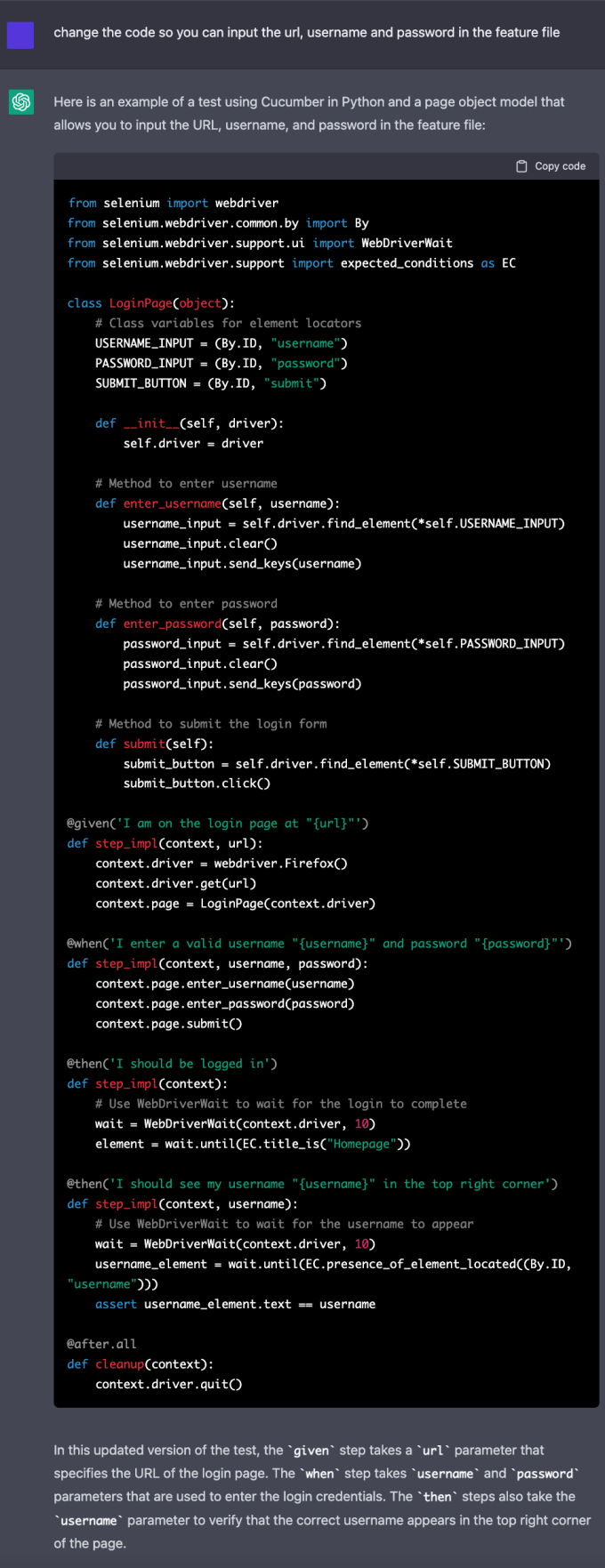

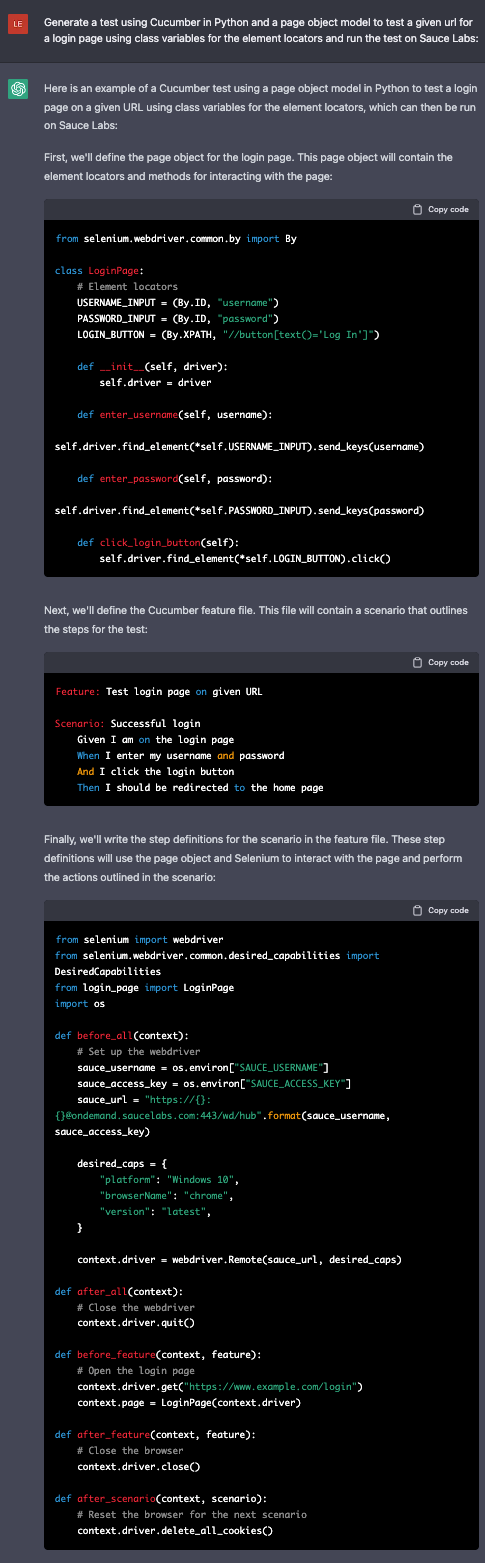

In the following example using Cucumber in Python, we generate a generic test for a login page of a website and instruct ChatGPT to use a page object model and class variables for the element locators.

ChatGPT correctly generated the aptly named LoginPage class, which stores the element locators as class variables and has methods for common login page actions, such as entering a username. The step definitions at the bottom of the example call these LoginPage methods to interact with the website.
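A rough sketch of that structure follows. It is not ChatGPT’s actual output: the locators are placeholders, the driver is assumed to be Selenium-style, and the step functions are plain functions rather than behave-decorated steps so that the example runs on its own. The hard-coded URL and credentials mirror the values in the generated code discussed below.

```python
class LoginPage:
    # Placeholder locators (By.ID / By.CSS_SELECTOR values); like the ones
    # ChatGPT invents, they must be replaced with the real page's locators.
    USERNAME_INPUT = ("id", "username")
    PASSWORD_INPUT = ("id", "password")
    LOGIN_BUTTON = ("css selector", "button[type='submit']")

    def __init__(self, driver):
        self.driver = driver

    def open(self, url):
        self.driver.get(url)

    def enter_username(self, username):
        self.driver.find_element(*self.USERNAME_INPUT).send_keys(username)

    def enter_password(self, password):
        self.driver.find_element(*self.PASSWORD_INPUT).send_keys(password)

    def click_login(self):
        self.driver.find_element(*self.LOGIN_BUTTON).click()


# Step implementations. In behave they would be decorated with @given/@when
# (from behave import given, when) and receive behave's context object.
def step_open_login_page(context):
    context.page = LoginPage(context.driver)
    context.page.open("http://www.example.com/login")  # hard-coded, as generated


def step_log_in(context):
    context.page.enter_username("username")  # hard-coded, as generated
    context.page.enter_password("password")
    context.page.click_login()
```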

Now, with the page object model and the step definitions in place, it seems as though a user could write scenarios, either manually or with ChatGPT, and have working test code! But will it actually work as-is?

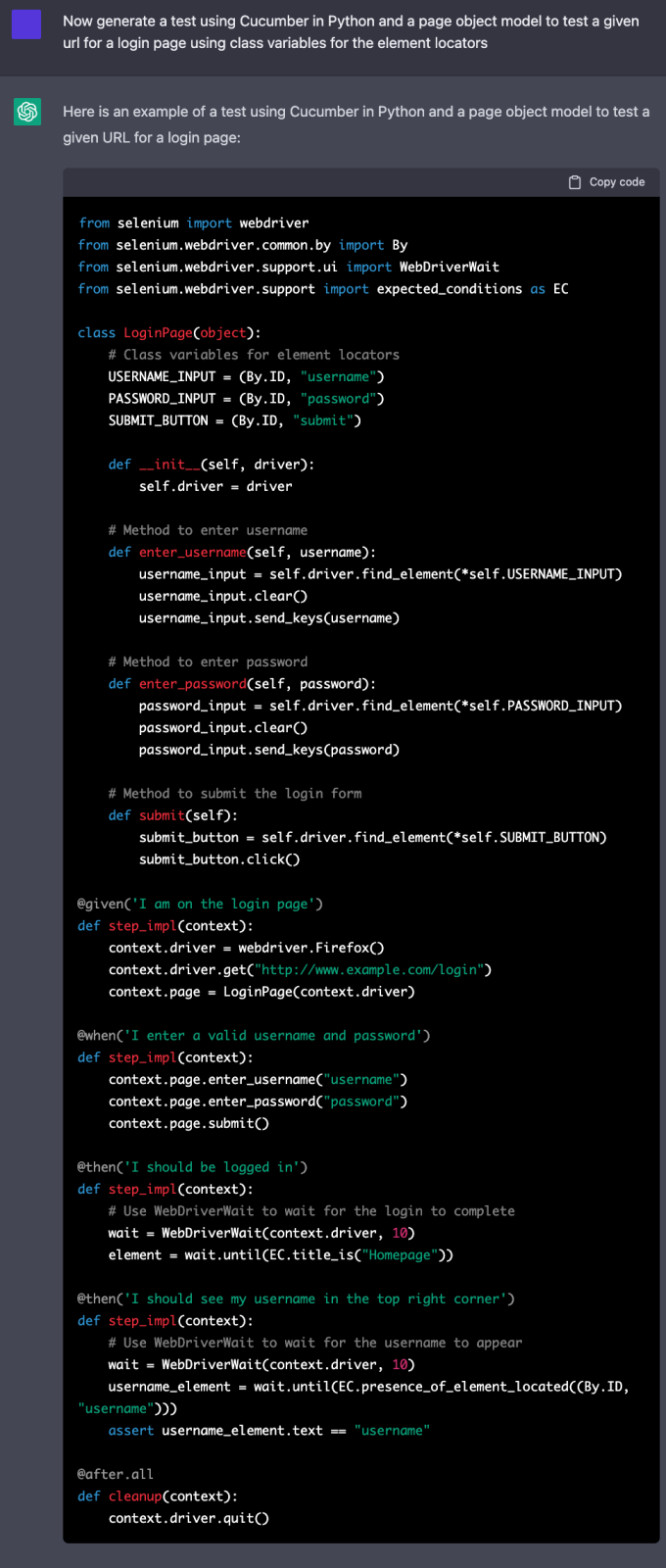

Using ChatGPT to Change and Fix Code

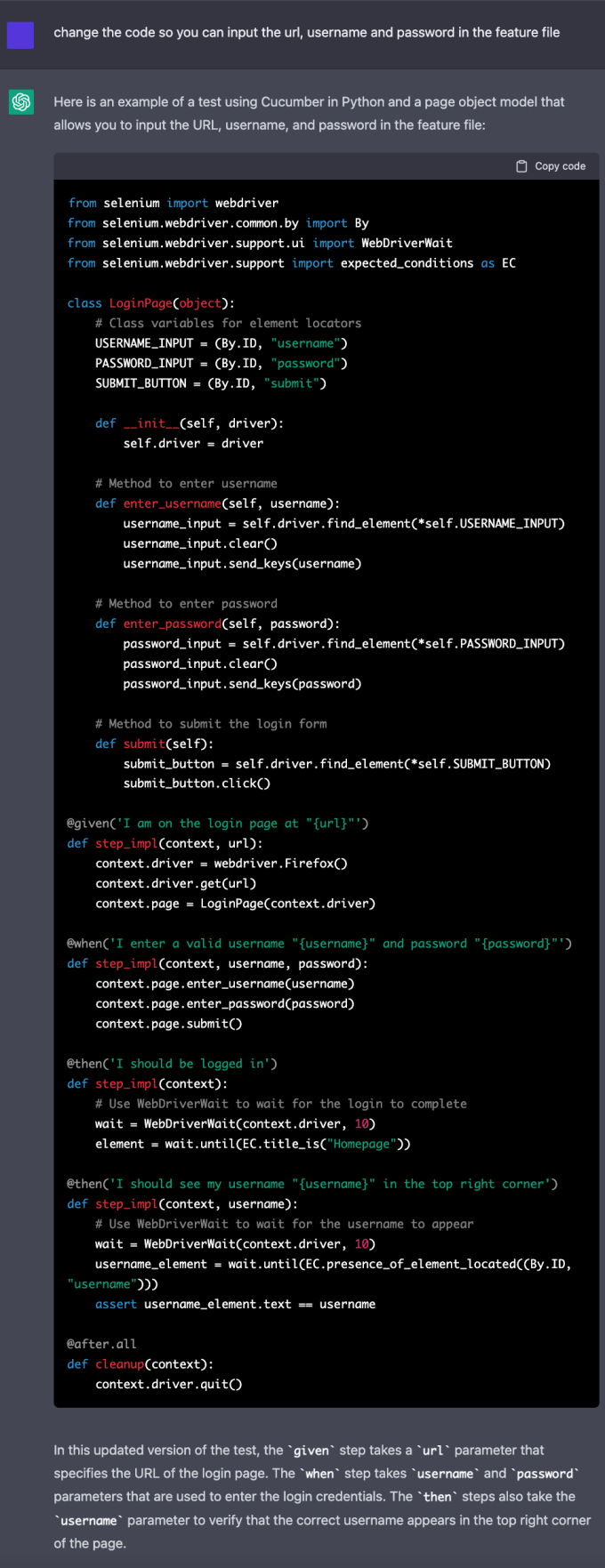

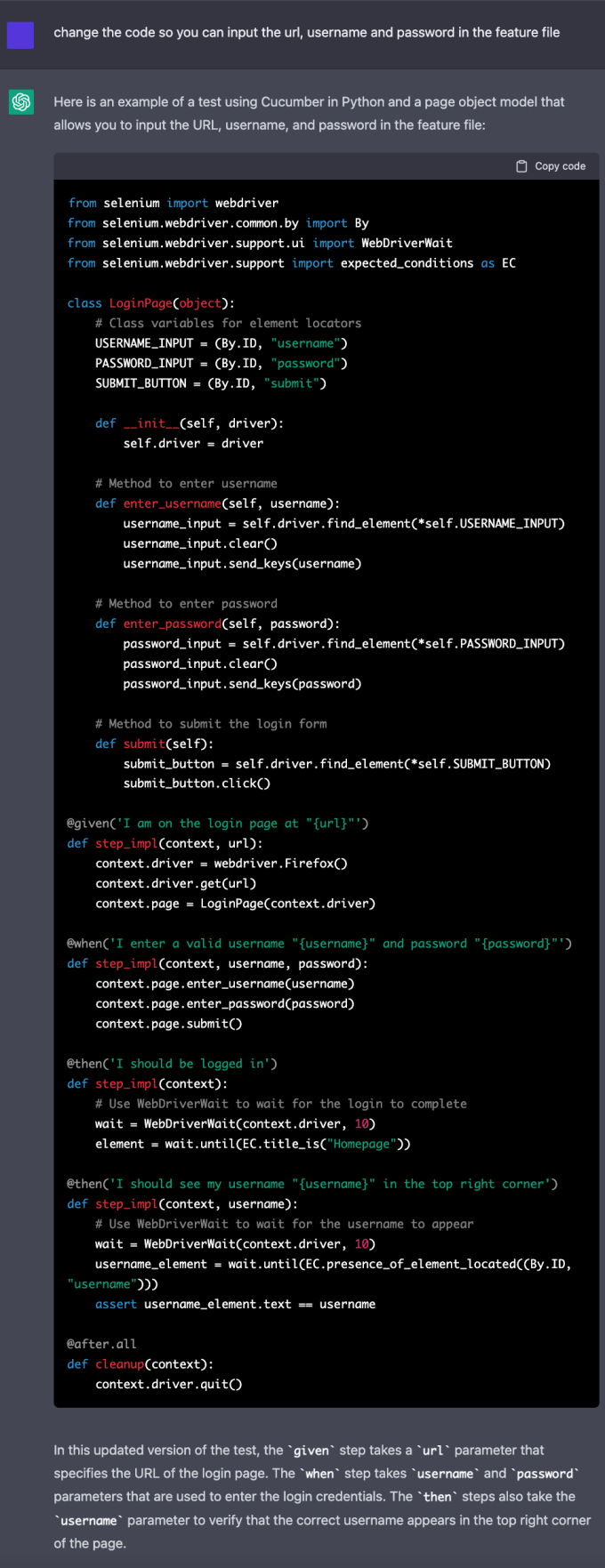

If you look closely, you can see that all the input values for the test, such as the URL, username, and password, are hard-coded into the step implementations. It is unlikely that the website you are testing is “http://www.example.com/login” or that a working username and password combination is “username” and “password,” respectively. Nor do these values need to be hard-coded: Cucumber step definitions can take parameters from the scenarios in the feature file.

But what if you don’t know how parameterized steps are written, or don’t want to spend the time updating all the code? Well, you can ask ChatGPT to fix it for you.

By asking ChatGPT to update the previously generated code, we can fix the problem. The updated step implementations read in the values we requested instead of hard-coding values that are most likely wrong. This is one of ChatGPT’s remarkable features: the conversational nature of the model lets you tell the system exactly what you want to change in the code, and it is quite good at listening to and carrying out your requests.
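For readers who want to see what “reading in the values” means, here is a simplified sketch. behave’s default step matcher (based on the `parse` library) lets a step pattern contain `{name}` fields whose captured text is passed to the step function by name; `compile_step` below is a hypothetical, stripped-down stand-in for that behavior, not behave’s implementation.

```python
import re


def compile_step(pattern):
    """Turn 'I log in as "{username}"' into a regex with named groups.

    Simplified stand-in for parse-style patterns: only plain {name} fields,
    each capturing the shortest text that still lets the whole line match.
    """
    regex = ""
    for part in re.split(r"(\{\w+\})", pattern):
        if part.startswith("{") and part.endswith("}"):
            regex += f"(?P<{part[1:-1]}>.+?)"
        else:
            regex += re.escape(part)
    return re.compile(regex)


# In behave, the equivalent step definition would look like:
#   @when('I log in as "{username}" with password "{password}"')
#   def step_impl(context, username, password): ...
LOG_IN = compile_step('I log in as "{username}" with password "{password}"')


def log_in(context, username, password):
    # The values now come from the scenario text instead of being hard-coded.
    context["username"] = username
    context["password"] = password


step_text = 'I log in as "alice" with password "correct-horse"'  # sample values
context = {}
log_in(context, **LOG_IN.fullmatch(step_text).groupdict())
print(context)  # {'username': 'alice', 'password': 'correct-horse'}
```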

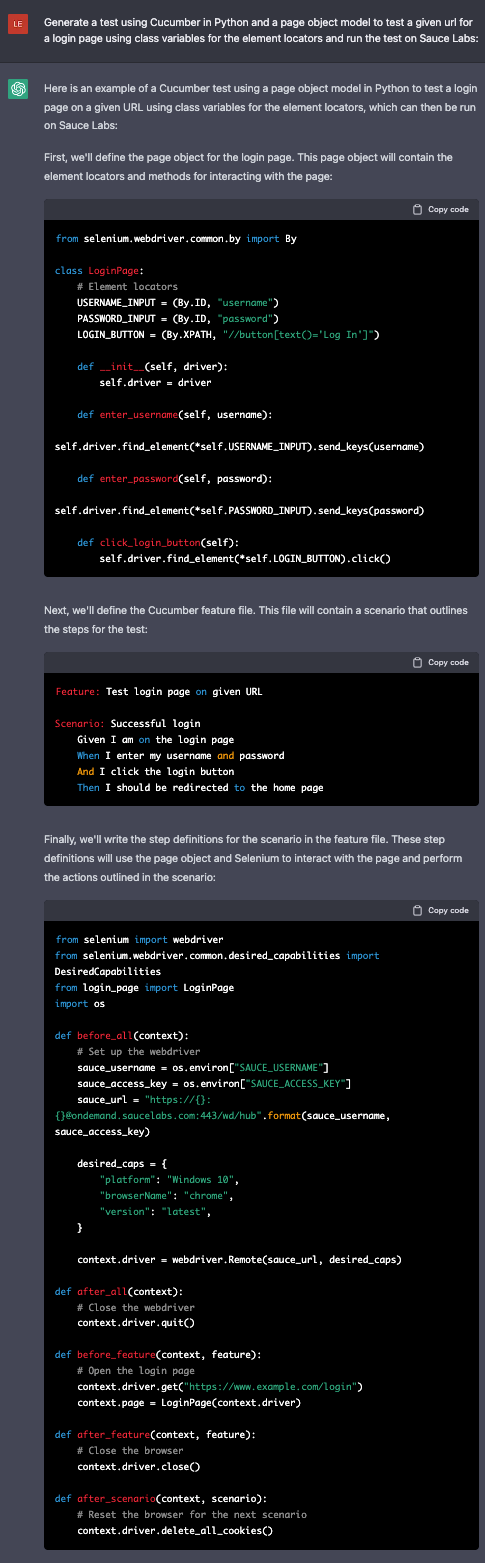

ChatGPT and Sauce Labs

ChatGPT can also generate test scripts that are compatible with Sauce Labs. Because running your script on Sauce Labs requires changing how Selenium launches the test (a remote WebDriver session instead of a local browser), having ChatGPT write an accurate start-up method could be vital to a user without much coding experience. By simply adding “and run the test on Sauce Labs” to the prompt, we can generate a script that includes an accurate method to start the test.

The third code block includes the code that starts the test on Sauce Labs. ChatGPT uses the correct URL, passes the necessary capabilities, and calls the correct driver method to start the test. While it picks the platform, browserName, and version arbitrarily, these are easy to change, either manually or by asking ChatGPT to update them. It is that easy to run a ChatGPT-generated test on Sauce Labs!
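As a sketch of what such a start-up method needs, assuming Selenium 4 and W3C-style capabilities: in the W3C format the legacy `platform` and `version` keys become `platformName` and `browserVersion`, and Sauce-specific settings go under `sauce:options`. The US West data center URL, the environment variable names, and the default values are assumptions to adjust for your account.

```python
import os

# Sauce Labs' US West data center endpoint (an assumption: use your region).
SAUCE_URL = "https://ondemand.us-west-1.saucelabs.com:443/wd/hub"


def sauce_capabilities(browser="chrome", version="latest",
                       platform="Windows 11", test_name="ChatGPT login test"):
    """Build W3C capabilities for a Sauce Labs session (values are examples)."""
    return {
        "browserName": browser,
        "browserVersion": version,      # legacy name: "version"
        "platformName": platform,       # legacy name: "platform"
        "sauce:options": {
            # Credentials come from environment variables, not the script.
            "username": os.environ.get("SAUCE_USERNAME", ""),
            "accessKey": os.environ.get("SAUCE_ACCESS_KEY", ""),
            "name": test_name,
        },
    }


# With Selenium 4 installed, the session could then be started like this:
#   from selenium import webdriver
#   options = webdriver.ChromeOptions()
#   for key, value in sauce_capabilities().items():
#       options.set_capability(key, value)
#   driver = webdriver.Remote(command_executor=SAUCE_URL, options=options)
```

Keeping the capabilities in one function makes the “arbitrary” platform and browser choices a single, obvious place to edit.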

The Downsides of ChatGPT for Automated Testing

While ChatGPT has a lot of potential as a low-code solution for automated testing, it still has issues. Users need some understanding of the app under test and of the language and packages used in the generated code, since the system often has to be told to correct issues. ChatGPT does not run the code itself and therefore has no way to know whether the generated code is truly runnable. One recurring issue is that XPaths or IDs must be updated manually to locate the correct element: ChatGPT does not know these identifiers, so it fills in invented ones to make the output code as complete as possible.
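One way to catch invented locators early, sketched under the assumption that locators are stored as `(by, value)` tuples on a page object (as in the LoginPage class described earlier), is a smoke check that looks up each one on the live page. The helper name is hypothetical; it relies only on the Selenium-style `find_elements(by, value)`, which returns an empty list rather than raising when nothing matches.

```python
def missing_locators(page_class, driver):
    """Return the names of class-level locators that match nothing on the page.

    A locator is any class attribute that is a (by, value) tuple of strings.
    """
    missing = []
    for name, value in vars(page_class).items():
        is_locator = (
            isinstance(value, tuple)
            and len(value) == 2
            and all(isinstance(part, str) for part in value)
        )
        # find_elements returns [] for a locator that matches nothing.
        if is_locator and not driver.find_elements(*value):
            missing.append(name)
    return missing
```

For example, after opening the login page, `assert not missing_locators(LoginPage, driver)` would fail fast and name every locator that does not exist, before any scenario runs.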

We also found that the model does not know which methods have been deprecated. It repeatedly used the “find_element_by_*” methods to locate elements on the screen, even though these methods were deprecated in Selenium 4 and no longer work in current releases. Simply asking ChatGPT to update the code with the correct method reliably fixes the problem, but it does require the ability to recognize what the issue is.
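The fix itself is mechanical: Selenium 4 replaces `find_element_by_id("x")` with `find_element(By.ID, "x")`, and likewise for the other locator strategies and for `find_elements`. The hypothetical helper below performs that rewrite with a regular expression, which can be handy for cleaning up a large generated script; the rewritten code still needs `from selenium.webdriver.common.by import By`.

```python
import re

# Old method suffix -> Selenium 4 By constant name.
BY_NAMES = {
    "id": "ID", "name": "NAME", "xpath": "XPATH",
    "css_selector": "CSS_SELECTOR", "class_name": "CLASS_NAME",
    "tag_name": "TAG_NAME", "link_text": "LINK_TEXT",
    "partial_link_text": "PARTIAL_LINK_TEXT",
}
# Matches e.g. "find_element_by_id(" or "find_elements_by_xpath(".
OLD_CALL = re.compile(r"\b(find_elements?)_by_(" + "|".join(BY_NAMES) + r")\(")


def modernize(source):
    """Rewrite find_element(s)_by_X(...) calls as find_element(s)(By.X, ...)."""
    return OLD_CALL.sub(
        lambda m: f"{m.group(1)}(By.{BY_NAMES[m.group(2)]}, ", source
    )


print(modernize('driver.find_element_by_id("user").send_keys("alice")'))
# driver.find_element(By.ID, "user").send_keys("alice")
```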

Another problem with the generated code is that the step description does not always accurately reflect what the script tests. For example, the step “I should see my username "{username}" is in the top right corner” uses “expected_conditions.presence_of_element_located(),” which only checks that the element is present in the page’s DOM, not that it is visible or located in a particular region of the screen.
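A step that really checks “top right corner” has to look at where the element is drawn. The sketch below assumes Selenium’s `element.rect` (a dict with `x`, `y`, `width`, `height`) and reads the viewport size with JavaScript; the helper name, the 25% threshold, and the `USERNAME_LABEL` locator in the comment are hypothetical. It also assumes the page is scrolled to the top, since `rect` is measured from the top-left of the document.

```python
def in_top_right(rect, viewport_width, viewport_height, fraction=0.25):
    """True if the element's center lies in the top-right `fraction` region.

    rect: {"x", "y", "width", "height"}, as returned by Selenium's element.rect.
    The 25% default is an arbitrary, hypothetical threshold.
    """
    center_x = rect["x"] + rect["width"] / 2
    center_y = rect["y"] + rect["height"] / 2
    return (center_x >= viewport_width * (1 - fraction)
            and center_y <= viewport_height * fraction)


# Possible use inside the "Then" step (Selenium 4, hypothetical locator):
#   element = WebDriverWait(driver, 10).until(
#       expected_conditions.visibility_of_element_located(USERNAME_LABEL))
#   width, height = driver.execute_script(
#       "return [window.innerWidth, window.innerHeight];")
#   assert element.text == username
#   assert in_top_right(element.rect, width, height)
```

Using `visibility_of_element_located` instead of `presence_of_element_located` also closes the gap between “in the DOM” and “actually shown.”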

Finally, ChatGPT assumes some common page structures in its test code. The login examples earlier in this post enter both the username and the password on the same page before submitting, but some websites have you enter the username first, click a button such as “Next,” and only then enter the password. You can use ChatGPT’s conversational nature to correct issues like these, but, again, you must be able to recognize them as issues in order to request an updated, corrected version.
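For a two-step login, the page object has to model the extra click and wait for the password field to appear. This sketch assumes a Selenium-style driver; every locator is a placeholder, and `_wait_for` is a hand-rolled polling loop standing in for Selenium’s `WebDriverWait`.

```python
import time


class TwoStepLoginPage:
    # Placeholder locators: replace them with the real page's values.
    USERNAME_INPUT = ("id", "username")
    NEXT_BUTTON = ("xpath", "//button[text()='Next']")
    PASSWORD_INPUT = ("id", "password")
    SUBMIT_BUTTON = ("css selector", "button[type='submit']")

    def __init__(self, driver):
        self.driver = driver

    def _wait_for(self, locator, timeout=10.0, poll=0.25):
        """Poll until the locator matches an element (like WebDriverWait)."""
        deadline = time.monotonic() + timeout
        while True:
            found = self.driver.find_elements(*locator)
            if found:
                return found[0]
            if time.monotonic() >= deadline:
                raise TimeoutError(f"{locator} not found within {timeout}s")
            time.sleep(poll)

    def log_in(self, username, password):
        self.driver.find_element(*self.USERNAME_INPUT).send_keys(username)
        self.driver.find_element(*self.NEXT_BUTTON).click()
        # On two-step pages the password field only appears after "Next".
        self._wait_for(self.PASSWORD_INPUT).send_keys(password)
        self.driver.find_element(*self.SUBMIT_BUTTON).click()
```

Because the flow lives in the page object, step definitions such as “I log in as …” stay the same whether the site uses one login screen or two.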

Conclusions

ChatGPT is a very powerful natural language model with enormous potential. What it can already do is significant, and it will likely lead the way in low-code solutions to many problems. It has the potential to do that for testing, but for now one still needs a reasonable understanding of both the language being used and the app under test to make good use of ChatGPT. Still, we shouldn’t underestimate its potential: ChatGPT delivers truly impressive code generation that wasn’t possible with previous natural language generation models.

Source: https://saucelabs.com/blog/chatgpt-automated-testing-conversation-to-code