Learn from the Testing Experts

27th March, 2026

SEATTLE

Keynote

The Last Guardrail: Why QA Is the Gate Between AI and Harm

When an AI system causes real harm to a real person, who had the last chance to stop it? In almost every incident we study, the answer isn’t the model. It’s the absence of testable guardrails. The gate that didn’t exist.

The question nobody asked, because nobody knew it was their job to ask it. Here’s the uncomfortable truth: that job already belongs to QA. You’re already doing governance work: every edge case you flag, every “what happens when” question you ask. It’s just undocumented, unmeasured, and unowned.

Takeaways from this talk

This keynote isn’t about adding AI governance to your plate. It’s about claiming authority for what you already do—and understanding why it matters more now than ever. Through real cases (including the tragic Character.AI incident), we’ll explore what “testing for trust” actually looks like, and why QA doesn’t need a seat at the governance table. QA is the table!

Featured Speakers

Engineering Ethics: Building Safe and Responsible AI Through Quality Engineering

As AI moves from experimental to production systems, testing can no longer stop at accuracy and performance. Quality Engineers now play a pivotal role in ensuring that AI technologies are safe, transparent, and ethically aligned. This session explores how to extend QE practices into the AI lifecycle: validating data, detecting bias, testing model behavior, and enforcing guardrails for fairness and reliability. You’ll learn practical strategies for embedding quality gates into ML pipelines, building explainability into automation, and monitoring AI drift in production. Join to discover how technical testers and engineers can become guardians of ethical AI, using code, tests, and observability to earn user trust in intelligent systems.

Takeaways from this talk

- AI Testing Beyond Accuracy: Learn how to extend functional and non-functional testing to include ethical and behavioral dimensions of AI systems.

- Quality Gates for AI Pipelines: Discover how QE practices (data validation, model auditability, and bias checks) integrate into CI/CD for ML.

- Bias Detection and Explainability: Explore practical techniques and tools for surfacing hidden bias and improving model transparency.

- AI Safety in Production: Understand how to monitor AI drift, enforce human oversight, and test guardrails for real-world resilience.

- Ethics as an Engineering Practice: See how engineers can operationalize ethical principles using test automation, governance frameworks, and collaboration across teams.

Scalable Automated Frameworks for Distributed Systems

In today’s software landscape, automated testing is no longer optional. The challenge is that traditional automation strategies, built around monoliths and simple pipelines, collapse when faced with dozens of microservices, asynchronous message buses, and environments that shift by the hour. In my talk, I will show how to address this problem by building scalable end-to-end automation frameworks composed of six key components: testware management, a domain-specific language, resource management, reporting, orchestration, and testability hooks in the system itself. Along the way, I will share how declarative DSLs reduce fragility, how dynamic resource allocation prevents “works on my machine” failures, and why observability must be treated as a design contract between developers and testers.

Takeaways from this talk

Attendees will leave with concrete takeaways:

- How to think about automation as a product rather than a script.

- How to design frameworks that scale as fast as their systems do.

- How to apply these principles immediately to reduce flakiness, improve feedback loops, and ultimately deliver safer software faster.

- How to position automation as a strategic enabler that bridges engineering practices with business outcomes, securing organizational buy-in for long-term quality investments.

From Shift-Left to Earlier Truth: Designing Feedback Loops

“Shift-left” is widely repeated, but teams often implement it as more gates and more end-to-end tests earlier. That frequently increases pipeline time, flakiness, and frustration without reducing risk. This session reframes shift-left as a feedback-system engineering challenge: deliver earlier truth with fast, reliable, owned, and cost-effective signals across the lifecycle.

Takeaways from this talk

Attendees will be able to:

- Translate “shift-left” into actionable outcomes: shorter time-to-signal and higher confidence.

- Use the Feedback Loop SLA model (time, fidelity, ownership, cost) to evaluate tests and gates.

- Place feedback intentionally across pre-commit, pre-merge, post-merge, and production, instead of forcing everything “left.”

- Build tiers of signal and avoid relying on UI E2E as the primary integration safety net.

- Detect and fix shift-left theater using concrete metrics: flake rate, time-to-diagnose, change failure rate, escaped defects.

- Apply a practical pipeline redesign checklist and a 30–60–90 day plan.

Fireside Chat Speaker

Millan Kaul

Millan Kaul is a Quality Engineering leader who builds and scales teams that deliver high-quality products customers trust and stay with. With nearly two decades of hands-on experience in quality engineering and software development, he has led initiatives ranging from $3M to $30M AAR across North America, Australia, Asia, and Europe.

He thrives in complex, ambiguous environments, turning business challenges into growth through data-driven decisions. His Quality Measurement Framework makes quality visible and measurable. Millan has driven impact across SaaS, Banking, FinTech, HealthTech, and Fitness Tech, and is a frequent international conference speaker and certified Agile and cloud practitioner.

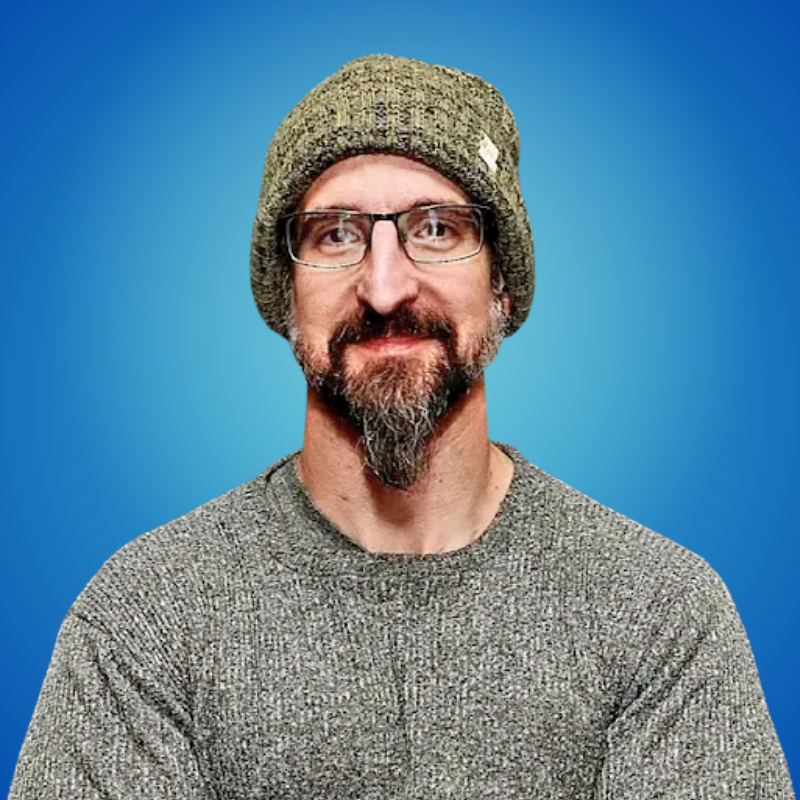

Melissa Benua

Melissa Benua is an AI-first, technical servant leader who applies systems thinking to solve complex challenges across engineering and the broader business landscape. She aligns technology strategy with organizational goals to drive efficient, high-impact software delivery.

Melissa has built high-performing teams and led cultural transformations that elevate engineering performance. Her expertise includes engineering efficiency, continuous testing and delivery, SRE transformations, and high-scale architectures exceeding 1M RPS. A decisive, data-driven leader, she combines rapid prototyping with strong technical depth while championing career growth, upskilling, and cross-organizational collaboration to deliver scalable, industry-leading results.

Panel Discussion Speakers

Naji Ghazal, Ph.D.

Naji Ghazal, Ph.D. is an engineering leader who modernizes software development to deliver world-class quality and accelerate organizational velocity. With over 20 years of experience across enterprise and cloud platforms, he embeds quality into every stage of the lifecycle through data-driven strategy, analytics, and automation-first practices.

He has reduced engineering costs by 30% within a year and accelerated release cycles by up to 40% through disciplined prioritization and structural optimization. Naji builds high-performing teams focused on clarity, accountability, and measurable outcomes—ensuring confident product delivery at scale.

Atul Kumar Singh

At NextGen Healthcare, I lead software engineering teams across the USA and India to modernize Electronic Health Records (EHR) systems, aligning technology with value-based care objectives. My role emphasizes strategic resource allocation, technical coordination, and delivering scalable, high-quality software solutions that support the company’s business goals.

Collaborating closely with senior leadership, I provide visionary guidance to development, product, and program managers while fostering innovation and continuous improvement. Leveraging expertise in cloud computing, SQL, and web applications, I focus on driving patient care enhancements through cutting-edge software architecture and a commitment to excellence.